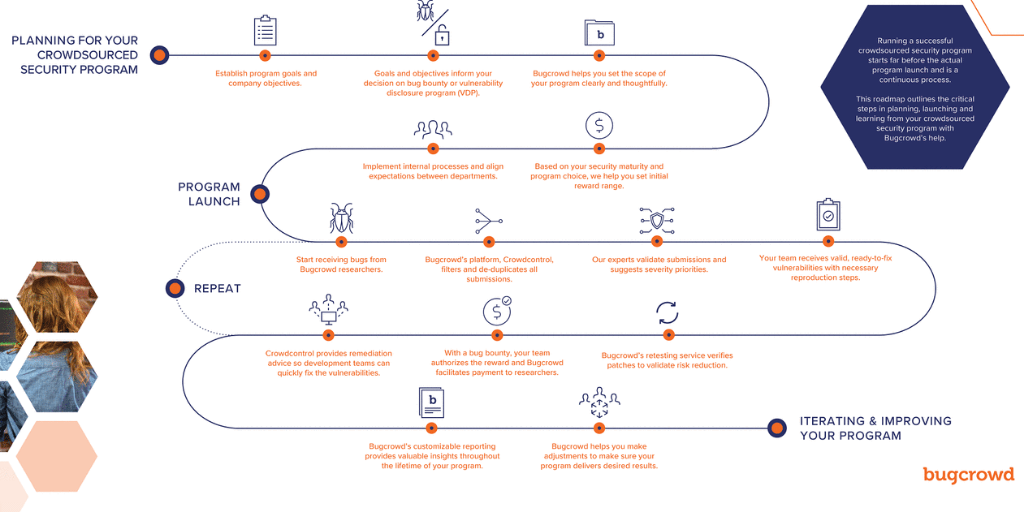

Running a successful bug bounty program starts well before the actual launch date, and it is a continuous, iterative process of improvement and growth. The workflow and lifecycle of a managed bug bounty program can typically be broken down into five parts: scoping, implementation, identification of findings, remediation of issues, and iteration based on what you learn.

Scoping:

The very first thing to do when preparing to run a bounty program is to make sure you have sufficient resources to operate and maintain it, and to get internal buy-in from all the relevant stakeholders. This may not feel like part of “scoping” in the technical sense, but it is necessary for the long-term success of your program. Make sure you know, and everyone within your organization knows, who is responsible for which parts of the program (who validates, who remediates, who rewards, and so on).

Next, in terms of technical scoping, it’s important to get a full and accurate understanding of:

- What your available attack surface is;

- More specifically, your clearly defined scope for this engagement/program.

By understanding your scoped attack surface, you can more clearly and thoroughly tell researchers where to focus their efforts, and provide documentation that will help them succeed. One of the great paradoxes of crowdsourced security is that we, somewhat counter-intuitively, want researchers to find as many vulnerabilities as possible – and giving them as many resources as we can is an important part of driving that success.

Finally, as the last stop on the scoping path, we also need to ensure that we establish clear goals and objectives for the program and set expectations around what qualifies as a successful program. Once these parameters have been established, we’re ready to begin writing the program brief by documenting the targets, focus areas, salient information, and incentives.

#ProTip: When writing your program brief, a good rule of thumb is to review it as if you were a researcher yourself. If you were offered this scope and these rewards, would you want to participate in this program? Does the brief include all the information you’d need to be successful? If the answer to either question is anything less than an emphatic “yes”, the brief probably needs some tweaking.

Implementing:

Once your program brief has been clearly and thoughtfully articulated (and stress tested), spend some time making sure that, once the program goes live, things go according to plan as much as possible. This includes setting up integrations (Jira, ServiceNow), building out workflows, creating templates, and so on, so that when a finding arrives, every involved party already has an established process to follow.

Implementation also means deciding, likely based on the goals and objectives set earlier, how and where your program will live: will it be a public program open to the world, or a private engagement limited to a smaller subset of researchers? Is it intended to be a VDP (vulnerability disclosure program), an “if you see something, say something” channel, or is the goal to have researchers consistently and actively testing the target? Depending on the answers, the format, content, and implementation of the program can vary fairly dramatically. But regardless of how and where, the most important thing to keep in mind is that everything works better when everyone is on the same page.

Identification of Findings:

Once the program is live, submissions will typically start rolling in almost immediately! This is precisely why there’s so much emphasis on having a plan and process in place as early as possible. Established, formalized workflows let us act effectively and efficiently as soon as the program gains traction, which, as noted above, can (and often does) happen very rapidly.

As with many things, how we start is of paramount importance, and no role is more critical in this phase than that of the team (or individuals) evaluating findings as they arrive, performing what Bugcrowd calls “triage and validation”. This first touch is core to building a sustained and meaningful relationship with researchers. At Bugcrowd, our ASE team handles this part of the equation: processing all new submissions and ensuring that anything triaged is valid, in scope, not a duplicate, and has sufficient replication steps. The work doesn’t stop there, however; one more step is needed to accept a finding, and it is the most crucial one as far as researchers are concerned: rewarding the report at a level consistent with what the program brief outlined.
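To make those four triage checks concrete, here is a minimal Python sketch of a triage gate. It is purely illustrative and is not how Bugcrowd’s ASE team or platform actually works: the `Submission` fields, the `triage` and `in_scope` helpers, and the scope patterns are all hypothetical, and scope matching assumes simple shell-style wildcards via the standard library’s `fnmatch` module.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from fnmatch import fnmatchcase

# Hypothetical scope lists, as they might appear in a program brief.
# '*' also matches dots, so '*.example.com' covers subdomains at any depth,
# but not the bare apex 'example.com'.
IN_SCOPE = ["*.example.com", "api.example.org"]
OUT_OF_SCOPE = ["legacy.example.com"]


@dataclass
class Submission:
    target: str                          # host the researcher tested
    reproduced: bool                     # could triage reproduce the issue?
    replication_steps: list[str] = field(default_factory=list)
    duplicate_of: str | None = None      # ID of an earlier report, if any


def in_scope(host: str) -> bool:
    """Explicit exclusions win over broader in-scope wildcards."""
    host = host.lower()
    if any(fnmatchcase(host, p) for p in OUT_OF_SCOPE):
        return False
    return any(fnmatchcase(host, p) for p in IN_SCOPE)


def triage(sub: Submission) -> tuple[bool, list[str]]:
    """Apply the four checks; return (passes, reasons for rejection)."""
    reasons = []
    if not sub.reproduced:
        reasons.append("not reproducible")
    if not in_scope(sub.target):
        reasons.append("out of scope")
    if sub.duplicate_of is not None:
        reasons.append(f"duplicate of {sub.duplicate_of}")
    if not sub.replication_steps:
        reasons.append("missing replication steps")
    return (not reasons, reasons)


ok, reasons = triage(Submission("app.example.com", True, ["Log in", "Open /settings"]))
print(ok, reasons)  # True []
```

Collecting every failing reason, rather than stopping at the first, gives the researcher complete feedback in a single response, which supports the relationship-building goal described above.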

On the Bugcrowd platform, accepting and rewarding a finding is a fairly straightforward process, and a substantially less time-consuming one than handling it on your own, largely thanks to the first-level triage already done by our ASE team. All that remains is to review the finding’s priority, move it to an accepted state, and pay according to the rewards structure defined during scoping. Once accepted and rewarded, the submission follows your internal workflows to reach an engineer for remediation.
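Paying “according to the rewards structure” can be sketched as a range check against the brief. This is a hypothetical illustration, not a platform API: the dollar ranges are placeholders, `validate_reward` is an invented helper, and the P1 to P5 labels assume the priority scale of Bugcrowd’s Vulnerability Rating Taxonomy (P1 most severe).

```python
# Placeholder reward ranges in USD, keyed by priority (P1 = most severe).
# The amounts are hypothetical; a real program sets its own in the brief.
# In this sketch, P5 carries no cash reward.
REWARD_RANGES = {
    "P1": (5000, 10000),
    "P2": (2000, 4000),
    "P3": (500, 1500),
    "P4": (100, 300),
    "P5": (0, 0),
}


def validate_reward(priority: str, amount: int) -> int:
    """Return `amount` if it sits within the brief's range for `priority`."""
    if priority not in REWARD_RANGES:
        raise ValueError(f"unknown priority: {priority!r}")
    low, high = REWARD_RANGES[priority]
    if not low <= amount <= high:
        raise ValueError(
            f"{priority} rewards must be between {low} and {high}, got {amount}"
        )
    return amount


print(validate_reward("P3", 750))  # 750
```

Rejecting out-of-range amounts keeps each payout consistent with what researchers were promised in the brief.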

Remediation of Issues:

Once valid bugs are fed back into your development lifecycle and prioritized by criticality (and weighed against the existing development workload), it is crucial to work with the development team(s) not only to fix the bugs at hand, but also to ensure the same coding mistakes don’t recur. To help here, Bugcrowd provides free remediation advice within the platform so you can work with your developers to understand, mitigate, and avoid introducing future security vulnerabilities into your product(s).

Once a finding has been remediated, be sure to tell the researcher, as many researchers will go back and retest it, try additional attack vectors, and help ensure there are no regressions.

Learning + Iterating:

Because, as we mentioned at the start of this post, running a bounty program is a continuous and ongoing process, it is important to stay on top of the program: regularly reassess results and outcomes against your goals and objectives, then adjust the program to meet those targets by increasing scope, advising researchers of changes and updates as they happen, adding rewards, placing more researchers on the program, creating additional programs as needed, and so on.

Throughout this process with Bugcrowd, our Account Management and Program Operations teams will work collaboratively with you and your team to provide experienced input and guidance along the way. Together, we’ll work to improve and grow both your program(s) and your overall security maturity as an organization – taking and applying the learnings from each step of the process, and then iterating onward and upward!

——

When launching a crowdsourced program, Bugcrowd helps you every step of the way.